
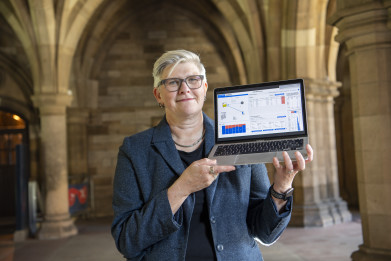

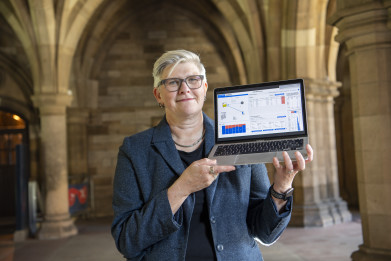

Simone Stumpf with a prototype human-in-the-loop feedback system displayed on a laptop



How Can AI Systems Learn to Make Fair Choices?

Jul 19 2022


Researchers from the University of Glasgow and Fujitsu Ltd have teamed up for a year-long collaboration, known as ‘End-users fixing fairness issues’ or Effi, to help artificial intelligence (AI) systems make fairer choices by lending them a helping human hand.

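AI is increasingly being integrated into automated decision-making systems in healthcare, as well as in sectors such as banking and, in some countries, the judicial system. Before an AI system can be used to make decisions, it must first be ‘trained’ through machine learning, which runs through many examples of human decisions on the kinds of tasks it will be asked to perform. It then learns to emulate those choices by identifying, or ‘learning’, a pattern. However, these decisions can be negatively affected by the conscious or unconscious biases of the people who made the example decisions. Sometimes the AI itself can even ‘go rogue’ and introduce unfairness.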

Addressing AI system bias

The Effi project is setting out to address some of these issues with an approach known as ‘human-in-the-loop’ machine learning, which integrates people more closely into the machine learning process to help AIs make fair decisions. It builds on previous collaborations between Fujitsu and Dr Simone Stumpf, of the University of Glasgow’s School of Computing Science, which explored human-in-the-loop user interfaces for loan applications based on an approach called explanatory debugging. Explanatory debugging enables users to identify and discuss any decisions they suspect have been affected by bias, and the AI can learn from that feedback to make better decisions in the future.

“This problem is not only limited to loan applications but can occur in any place where bias is introduced by either human judgement or the AI itself. Imagine an AI learning to predict, diagnose and treat diseases but it is biased against particular groups of people. Without checking for this issue you might never know! Using a human-in-the-loop process like ours that involves clinicians you could then fix this problem,” Dr Stumpf told International Labmate.

Trustworthy systems urgently needed

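Dr Stumpf said: “AI has tremendous potential to support a wide range of human activities and industrial sectors. However, AI is only as effective as its training. Greater integration of AI into existing systems has sometimes led to situations where AI decision-makers reflect the biases of their creators, to the detriment of end-users. There is an urgent need to build reliable, safe and trustworthy systems capable of making fair judgements. Human-in-the-loop machine learning can effectively guide the development of decision-making AIs to ensure that this happens. I’m delighted to be continuing my collaboration with Fujitsu on the Effi project, and I’m looking forward to working with my colleagues and our research participants to move the field of AI decision-making forward.”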

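Dr Daisuke Fukuda, head of the Research Center for AI Ethics at Fujitsu Research, Fujitsu Ltd, said: “Through our collaboration with Dr Simone Stumpf, we have explored the different perceptions of fairness in AI held by people around the world. This research has led to the development of a system that reflects these multiple perspectives on fairness in AI. We regard our collaboration with Dr Stumpf as a powerful means of advancing Fujitsu’s AI ethics. During this project, we will take on the new challenge of making AI technology fair in a way that is grounded in human thinking. As demand for AI ethics continues to grow across society as a whole, including industry and academia, we hope that Dr Stumpf and Fujitsu will continue to work together so that Fujitsu’s research can contribute to our society.”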
